What Is Agentic AI?

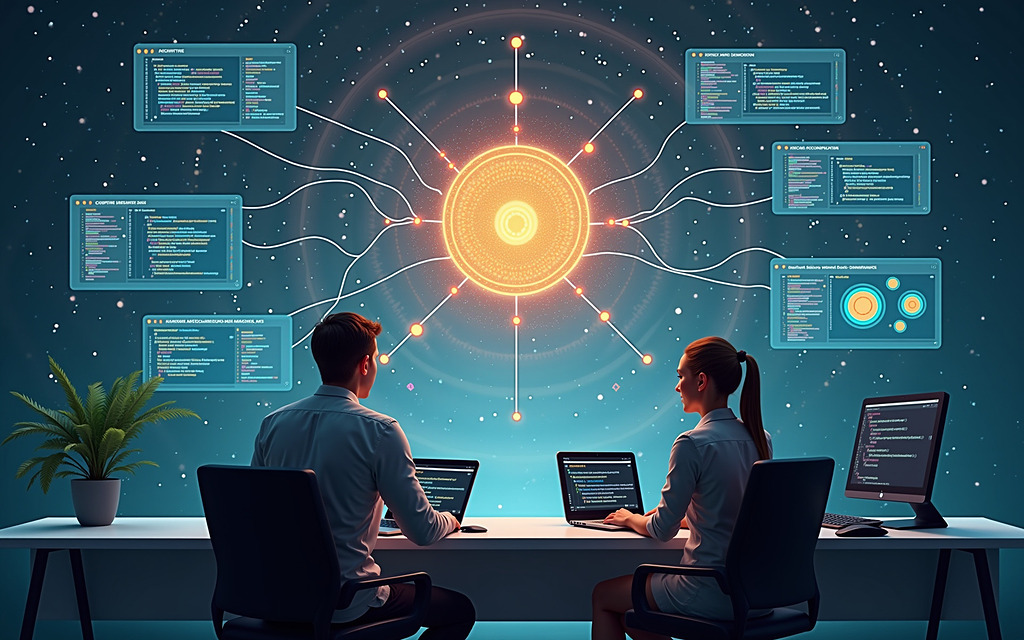

Agentic AI refers to a class of AI systems capable of autonomously perceiving, planning, and acting to achieve complex goals with minimal human supervision. Unlike traditional AI systems, which are task-specific and reactive, Agentic AI systems are goal-driven, adaptive, and self-directed.

They represent a shift from prompt-based AI to autonomous agents that can operate in dynamic environments, make decisions, and interact with tools and systems to complete tasks end-to-end.

Core Architecture of Agentic AI

Agentic AI systems are typically composed of the following layers:

- Large Language Models (LLMs): For reasoning, planning, and communication.

- Persistent Memory: To retain context, learn from past actions, and adapt over time.

- Tool Use: Integration with APIs, databases, browsers, and other digital tools.

- Orchestration Layer: Manages task decomposition, execution, and coordination across multiple agents or tools.

This layered architecture enables agents to operate autonomously across diverse domains.
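
As a rough illustration of how these layers fit together, here is a minimal sketch in Python. Everything in it is hypothetical: `fake_llm` stands in for a real model call, the two tools are toy lambdas, and "persistent" memory is just an in-process list. It shows only the shape of the architecture, not how any particular framework implements it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: picks a tool name for the task."""
    return "search" if "find" in prompt.lower() else "echo"


@dataclass
class Agent:
    llm: Callable[[str], str]                         # reasoning layer
    tools: Dict[str, Callable[[str], str]]            # tool-use layer
    memory: List[str] = field(default_factory=list)   # memory layer (in-process only)

    def handle(self, task: str) -> str:
        # Orchestration: ask the model which tool fits, run it, record the outcome.
        tool_name = self.llm(task)
        result = self.tools[tool_name](task)
        self.memory.append(f"{task} -> {tool_name}: {result}")
        return result


agent = Agent(
    llm=fake_llm,
    tools={
        "search": lambda q: f"results for '{q}'",  # toy tool, assumed for illustration
        "echo": lambda q: q,                       # toy tool, assumed for illustration
    },
)
```

Calling `agent.handle("Find papers on agents")` routes the task to the `search` tool and appends one entry to `agent.memory`. A real system would replace each piece with a model API, a durable store, and actual integrations.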

The Agentic AI Loop

Agentic AI operates in a continuous feedback loop:

- Observe: Gather data from the environment or user input.

- Plan: Formulate a strategy or sequence of actions.

- Act: Execute tasks using tools or APIs.

- Evaluate: Assess outcomes and adjust behavior.

- Repeat: Iterate until the goal is achieved.

This loop allows agents to adapt in real time and handle complex, evolving tasks.
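
The loop can be sketched with a deliberately simple toy problem: moving an integer `state` toward a `goal`. The function name `run_loop` and the `max_steps` cap are assumptions made for this example. A real agent would observe a richer environment and plan with a model rather than a comparison.

```python
from typing import List


def run_loop(goal: int, state: int, max_steps: int = 20) -> List[int]:
    """Toy observe-plan-act-evaluate loop; returns every state visited."""
    history = [state]
    for _ in range(max_steps):                    # Repeat, with a safety cap
        observation = state                       # Observe the current state
        if observation == goal:                   # Evaluate: stop once the goal is met
            break
        step = 1 if observation < goal else -1    # Plan the next action
        state = observation + step                # Act on the plan
        history.append(state)
    return history
```

For example, `run_loop(goal=3, state=0)` visits `[0, 1, 2, 3]`. The `max_steps` cap is one simple way to keep an agent from iterating forever when the goal is unreachable.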

Challenges and Considerations

Despite their potential, Agentic AI systems face several challenges:

- Emergent Behavior: Unpredictable actions due to complex reasoning chains.

- Coordination Failures: In multi-agent systems, agents may conflict or duplicate efforts.

- Security Risks: Agents with tool access can be misused or manipulated, so that access should be sandboxed and restricted.

- Ethical Concerns: Decisions made by agents must be transparent and fair.
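
One small piece of the security and transparency concerns above can be sketched as a gateway that only lets an agent call tools on an explicit allowlist and records every attempt. This is not a full sandbox, which would also need process or network isolation. The names `ToolGateway` and `ToolNotAllowed` are hypothetical, chosen for this example.

```python
from typing import Callable, Dict, Iterable, List, Tuple


class ToolNotAllowed(Exception):
    """Raised when an agent requests a tool outside its allowlist."""


class ToolGateway:
    def __init__(self, tools: Dict[str, Callable[[str], str]], allowed: Iterable[str]):
        self._tools = tools
        self._allowed = set(allowed)
        self.audit_log: List[Tuple[str, str]] = []  # every attempted call, for review

    def call(self, name: str, arg: str) -> str:
        self.audit_log.append((name, arg))          # log before checking permission
        if name not in self._allowed:
            raise ToolNotAllowed(name)
        return self._tools[name](arg)


gateway = ToolGateway(
    tools={
        "read": lambda path: f"read {path}",        # toy tool, assumed for illustration
        "delete": lambda path: f"deleted {path}",   # toy tool, assumed for illustration
    },
    allowed=["read"],
)
```

Here `gateway.call("read", "a.txt")` succeeds, while `gateway.call("delete", "a.txt")` raises `ToolNotAllowed`. Both attempts land in `gateway.audit_log`, which gives reviewers a trace of what the agent tried to do.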

Final Thoughts

Agentic AI represents a profound shift in how we design and interact with intelligent systems. By moving beyond static prompts and reactive models, we are entering an era where AI can reason, act, and adapt — not just assist.

This evolution opens up extraordinary possibilities: from autonomous research assistants to self-managing infrastructure and intelligent customer service agents. But with this power comes responsibility. As we build more capable agents, we must also build ethical frameworks, robust safeguards, and transparent systems that align with human values.

The future of AI is not just about intelligence — it’s about agency. And how we shape that agency will define the next chapter of human–machine collaboration.